Bringing discipline to enterprise Machine Learning

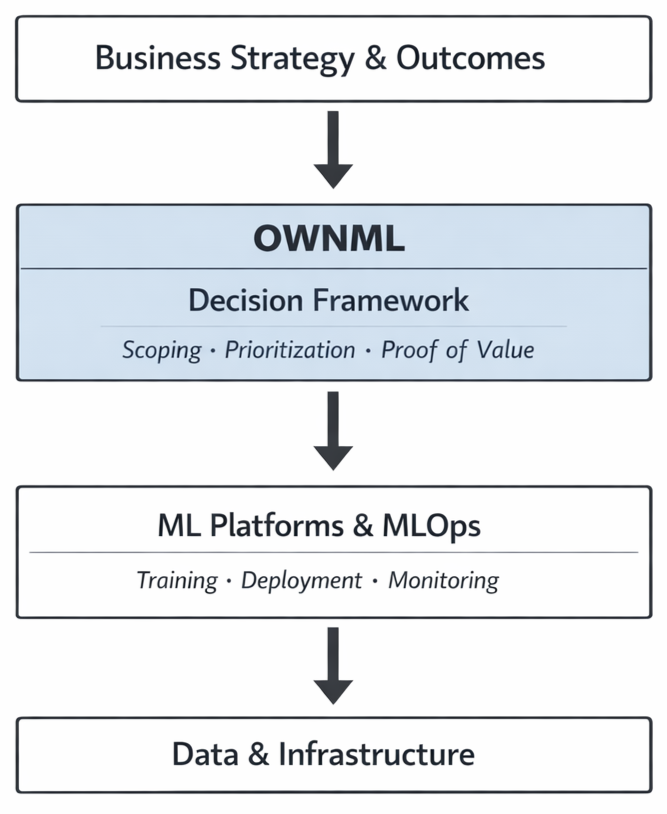

OWNML helps scope, prioritize, and govern Machine Learning across teams — so the right ML work gets funded and scaled with confidence.

Most organizations don’t lack ML talent.

They lack a shared way to decide what to build.

Across organizations, ML teams face the same patterns:

- Too many possible ML projects, not enough focus
- Excitement around GenAI and agents, but unclear scope or boundaries
- Projects stall when there’s no clear “customer” for the prediction
- Value discussed in model metrics, not in business terms
- Each team works differently, making results hard to compare or scale

The result is fragmented effort, slow progress, and growing skepticism — even when teams are doing solid technical work.

A shared framework to bring discipline to ML

OWNML provides a common structure teams use to scope ML work, align with stakeholders, and evaluate progress in business terms.

What teams get with OWNML

- A common structure and vocabulary to scope, compare, and discuss ML work across teams.
- Each ML initiative starts with explicit assumptions, success criteria, and decision context, reducing overbuilding and rework.
- One-pagers and scorecards that translate ML progress into business terms, supporting prioritization and funding decisions.
- Early experiments are guided by assumptions and impact simulation, making it easier to decide whether to scale, pivot, or stop.
- Teams keep their technical freedom, while leadership gains comparability and governance across the ML portfolio.

Trusted by ML teams in large organizations

OWNML was created by the author of the Machine Learning Canvas, a framework used by thousands of practitioners worldwide and adopted by teams in large organizations.