Machine Learning Canvas v1.1: change log

Earlier this month (April 2021), I released version v1.1 of the Machine Learning Canvas. It’s grown more stable in the last couple of years, so last November, instead of updating from v0.4 to v0.5, I decided to go straight to v1.0. After a couple of months of experience with it, coaching a few companies in their usage of MLC v1.0, I made a couple of tweaks and ended up with v1.1.

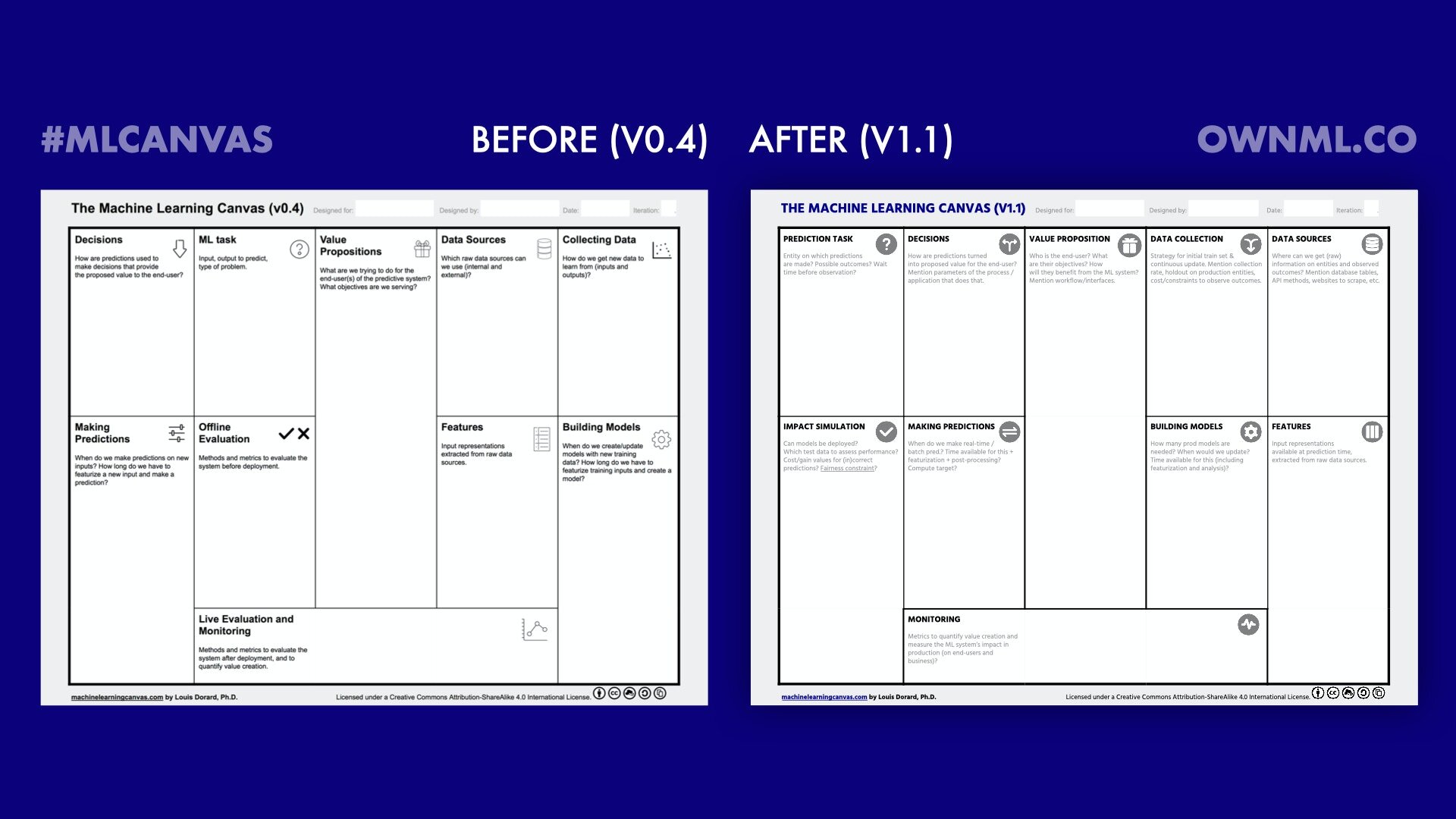

Let's have a look at all those changes since v0.4. I’ve changed the ordering of some of the boxes, in a way that makes the structure of the MLC easier to understand (and memorize). I’ve also refined the boxes’ headers and prompts, to help fill the MLC in the right way.

These changes have a slight impact on the scope of the MLC. Since v0.4, it's become increasingly important to adopt a user-centered and responsible approach when designing intelligent systems that are based on ML: .

User-centered design → there is now more emphasis on the end-user, their workflow, and the interface with the system, under Value Proposition.

Responsible ML/AI:

Offline Evaluation now mentions fairness constraint

Building Models now mentions their “analysis”, which should include global model explanations

Making Predictions now mentions the post-processing of predictions, which can include single prediction explanations.

Changes to the layout: reordering

Decisions and Data Collection relate to the integration of the ML system in the domain of application. They were brought closer to the center of the MLC, next to Value Proposition. This also makes sense because the left-most box is ML Task, then followed by Decisions, which makes the connection with how value is provided.

Making Predictions and Building Models relate to the predictive engine which is at the core of the ML system. Same story here, they were also brought closer to the center. Doing this also gives more space for a long list of Features, and for Offline Evaluation, which typically contains a lot of information; you can also end that block with information on the minimum performance value, for metrics that can be computed offline, but could also be monitored live (and Live Monitoring is right next to the bottom of that box!).

Changes to the headers and prompts

Here is a review of the 10 boxes of the MLC, highlighting changes to the headers and prompts.

Value Proposition:

Before (v0.4): What are we trying to do for the end-user(s) of the predictive system? What objectives are we serving?

After (v1.0 onwards): Who is the end-user? What are their objectives? How will they benefit from the ML system? Mention workflow/interfaces.

Live Monitoring → Monitoring:

Before: Methods and metrics to evaluate the system after deployment, and to quantify value creation.

After: Metrics to quantify value creation and measure the ML system’s impact in production (on end-users and business)

ML Task → Prediction Task: Changed the terminology. "Input" was interpreted by some as values manipulated by the machine, whereas what was meant was the real-world object, or "entity", on which predictions are to be made. "Output" was replaced by "outcome", which also sounds less technical and closer to something that's observed in the real world. Chose to focus on prediction tasks, instead of covering all ML tasks (including unsupervised learning). ML-powered predictions is how most of the value is created with "AI" today. This focus allows to highlight the time dimension of things, and the need to wait: from the moment an entity is observed and a prediction is requested, to the moment an outcome of interest can be observed.

Before (v0.4): Input, output to predict, type of problem.

v1.0: Type of task? Input object? Output: definition, parameters (e.g. prediction horizon), possible values?

After (v1.1): Type of task? Entity on which predictions are made? Possible outcomes? Wait time before observation?

Features: Emphasized that they should be available at prediction time.

Data sources: Made it a bit more specific by making a distinction between input data (aka "entities" in Prediction Task) and output data (aka "observed outcomes"), and suggesting to mention databases and tables, or APIs and methods of interest.

Before (v0.4): Which raw data sources can we use (internal and external)?

v1.0: Which raw data sources can we use (internal, external)? Mention databases and tables, or APIs and methods of interest.

After (v1.1): Where can we get (raw) information on entities and observed outcomes? Mention database tables, API methods, websites to scrape, etc.

Data collection (brand new prompt!): Added a focus on continuous data collection (not just initial train set). Highlighted the differences in input and output collection (aka occurence of new entities and observation of outcomes), the cost of the latter, and finally, holding production entities out of the decision process (to deal with feedback loops).

Before (v0.4): How do we get new data to learn from (inputs and outputs)?

v1.0: Strategy for initial train set, and continuous update. Collection rate? Holdout on prod inputs? Output acquisition cost?

After (v1.1): Strategy for initial train set and continuous update. Mention collection rate, holdout on production entities, cost/constraints to observe outcomes.

Making Predictions: This was already mentioning the featurization that needs to happen before predictions, and now it also mentions the post-processing, which could include prediction explanations, or preparing predictions for usage in the decision-making process. I’ve also added a focus on the compute target (which leads to considering things such as memory constraints, in addition to latency constraints)

Before: When do we make predictions on new inputs? How long do we have to featurize a new input and make a prediction?

After: When do we make real-time / batch predictions? Time available for this + featurization + post-processing? Compute target?

Building Models: Clarified so that you get to think about how many models are needed, e.g. 1 per end-user, 1 per locality, etc. Similarly to Making Predictions, there was a mention of the featurization that needs to happen before model building, and now there’s also a mention of the analysis of the model that needs to happen afterwards. This includes things such as computing global model explanations, and testing the model to see if it can be safely deployed. The compute target is not mentioned here, because it’s likely to be more flexible than for making predictions, and less of a bottleneck.

Before: When do we create/update models with new training data? How long do we have to featurize training inputs and create a model?

After: How many production models are needed? When would we update? Time available for this (including featurization and analysis)?

Offline Evaluation → Impact Simulation (+ brand new prompt!): This wasn’t specific enough, but the new version now highlights that this is about decide whether it’s ok to deploy in production or not, and simulating the impact of predictions + decisions. Note that we want to evaluate decisions, not just predictions. This simulation is described via the test data on which predictions will be made (how is it collected? over which period of time?) and via the cost and gain values associated to (in)correct decisions (it’s best to avoid “abstract” metrics and to focus instead on domain-specific ones).

Before (v0.4): Methods and metrics to evaluate the system before deployment.

v1.0: Simulation of the impact of decisions/predictions? Which test data? Cost/gain values? Deployment criteria (min performance value, fairness)?

After (v1.1): Can models be deployed? Which test data to assess performance? Cost/gain values for (in)correct predictions? Fairness constraint?

Looking forward to seeing you make the best use of this new version of the MLC!